Award Review Assistants: AI Tools That Extract Compliance Details Automatically

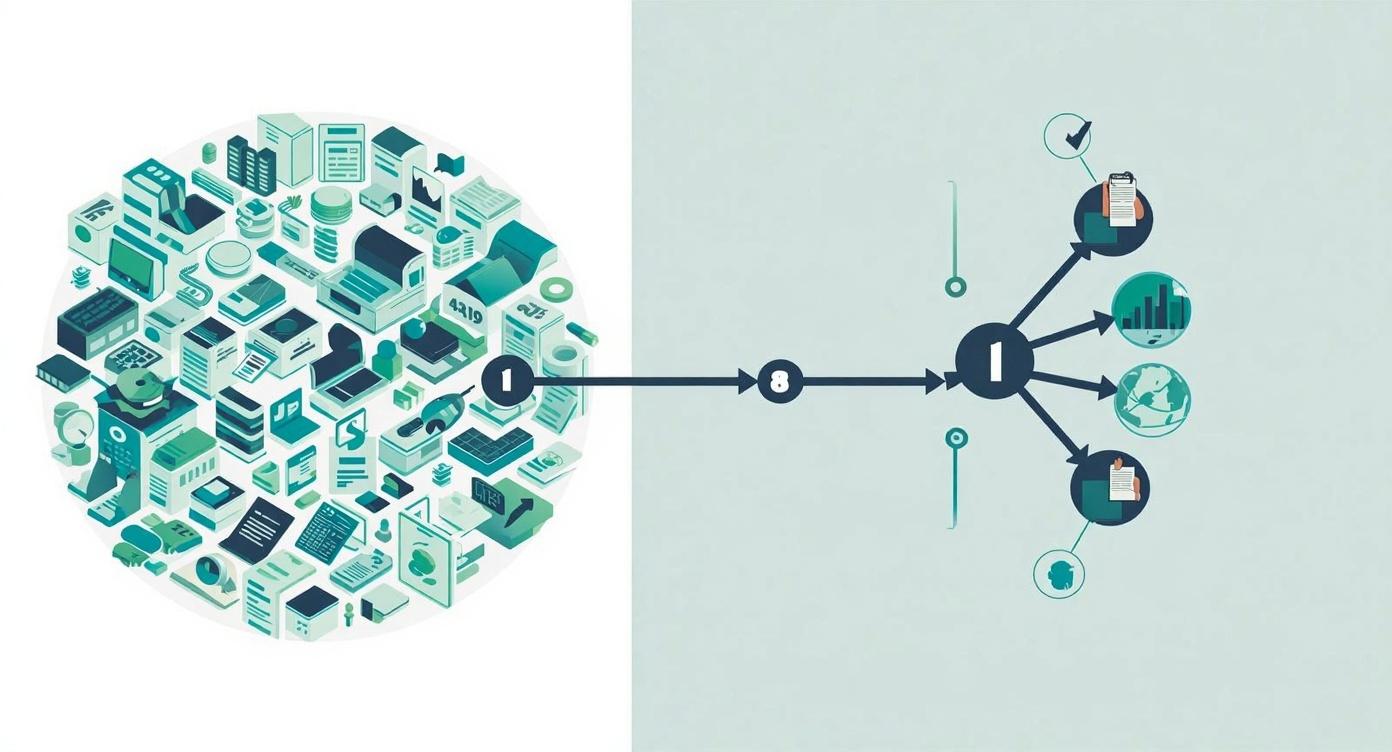

When your development director opens a 47-page federal grant award agreement with dozens of compliance requirements scattered throughout dense legal text, they face hours of painstaking work: highlighting deadlines, extracting budget restrictions, and building spreadsheets to track reporting obligations. AI-powered award review assistants now take over most of this manual drudgery by extracting key compliance details from grant agreements in seconds, turning a tedious multi-hour task into a largely automated process that reduces both workload and compliance risk.

Grant compliance has always been one of the most meticulous, detail-oriented aspects of nonprofit management. A single missed reporting deadline or budget restriction violation can jeopardize hundreds of thousands of dollars in funding. Yet the traditional process of reviewing grant award agreements remains stubbornly manual, requiring staff to read through lengthy documents page by page, highlighting key terms, manually entering deadlines into calendars, and hoping nothing slips through the cracks.

Award review assistants represent a fundamental shift in how nonprofits handle this critical work. These AI-powered tools can analyze grant award documents, automatically extract compliance requirements, identify reporting deadlines, flag budget restrictions, and populate tracking systems with minimal manual data entry. What once required hours of concentrated attention from skilled grant managers can now happen in seconds, often with accuracy that matches manual review for standard requirements, leaving staff to verify results rather than transcribe them.

This article explores how award review assistants work, what they can extract from grant documents, how they integrate with existing grant management systems, and how nonprofits of all sizes can leverage these tools to reduce administrative burden while strengthening compliance. Whether you're managing a single federal grant or coordinating dozens of foundation awards, understanding these emerging tools can help you make more informed decisions about your grant management technology stack.

We'll examine specific platforms offering award review capabilities, discuss the practical implementation challenges nonprofits face, and provide guidance on when manual review still matters. By the end, you'll understand how to evaluate whether award review assistants make sense for your organization and how to integrate them effectively into your grant management workflow.

The Manual Award Review Problem

Before we explore the solution, it's important to understand why award review has been such a persistent challenge for nonprofits. The problem isn't simply that grant agreements are long, though many federal awards run 40 to 60 pages. The real challenge is that compliance requirements are scattered throughout these documents in inconsistent formats, buried within legal language, and often referenced indirectly through citations to federal regulations.

Consider a typical federal grant governed by 2 CFR 200, the Uniform Guidance for federal grant administration. The award agreement might state the base grant amount on page 3, reference matching requirements in a footnote on page 7, specify reporting deadlines in an attachment on page 32, detail allowable cost categories in section 12, require specific audit procedures in section 18, and mandate data collection protocols in appendix B. A grant manager reviewing this document manually must read every page carefully, build their own tracking system, and hope they have caught every critical detail.

The stakes are high. Missing a quarterly report deadline can trigger payment holds that disrupt program operations. Overlooking a budget modification approval requirement can lead to audit findings. Failing to track match obligations accurately can result in disallowed costs that the nonprofit must repay from unrestricted funds. Yet many nonprofits lack dedicated grant compliance staff, meaning program directors and development officers squeeze award review into already packed schedules, increasing the risk of oversight.

Common Award Review Challenges

Why manual processes create compliance risk

- Compliance details scattered across dozens of pages with no standardized structure

- Technical requirements referenced through regulatory citations that require separate lookup

- Multiple deadline types (application, reporting, spending, closeout) with different calculation methods

- Budget restrictions and allowable cost limitations that vary by funding source and program type

- Time-consuming manual data entry into grant management systems after document review

- Human error risk when transferring information between documents and tracking systems

- Lack of standardized review process across different staff members handling various grants

How Award Review Assistants Work

Award review assistants leverage natural language processing and machine learning to analyze grant documents in ways that go far beyond simple text search. These systems are trained to understand the structure and language patterns common in grant agreements, enabling them to identify compliance-relevant information even when it's expressed in varied ways across different funders and programs.

When you upload a grant award document, the AI system first analyzes the document structure, identifying sections, tables, attachments, and footnotes. It then applies specialized extraction models trained to recognize specific types of compliance information: financial terms, deadline language, reporting requirements, budget restrictions, match obligations, and audit provisions. Unlike keyword search, which only finds exact matches, these models understand semantic meaning, so they can identify a reporting requirement whether it's phrased as "quarterly progress reports due 30 days after quarter end" or "grantees shall submit program updates on a quarterly basis within one month of period close."

The sophistication of these tools varies considerably. Basic implementations might extract only the most obvious deadline dates and award amounts. More advanced systems, like Instrumentl's Award Review Assistant, can automatically identify over 20 different types of compliance details, including budget period dates, indirect cost rates, matching fund requirements, reporting schedules, payment terms, prior approval requirements, and specific programmatic restrictions.

What makes modern award review assistants particularly valuable is their ability to not just extract information, but also to structure it in actionable formats. Rather than simply highlighting text in the original document, these tools populate database fields, create calendar entries, set up automated reminders, and generate compliance checklists. This transformation from unstructured text to structured data enables automated tracking and monitoring throughout the grant lifecycle.
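To make the idea of structured output concrete, here is a minimal Python sketch of what one extracted reporting clause might look like once it has been turned into data, and how due dates follow from it. The `ReportingRequirement` class, its field names, and the 2025 calendar-quarter dates are illustrative assumptions, not the schema of any particular platform.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class ReportingRequirement:
    """Hypothetical structured form of one extracted reporting clause."""
    name: str
    period_ends: tuple[date, ...]  # last day of each reporting period
    days_after_period_end: int     # e.g. 30 for "due 30 days after quarter end"


def due_dates(req: ReportingRequirement) -> list[date]:
    """Return one due date per reporting period."""
    offset = timedelta(days=req.days_after_period_end)
    return [end + offset for end in req.period_ends]


# Assumed example: calendar quarters in 2025, reports due 30 days after each.
quarterly = ReportingRequirement(
    name="Quarterly progress report",
    period_ends=(
        date(2025, 3, 31),
        date(2025, 6, 30),
        date(2025, 9, 30),
        date(2025, 12, 31),
    ),
    days_after_period_end=30,
)

for due in due_dates(quarterly):
    print(quarterly.name, "due", due.isoformat())
```

Once a clause is stored this way, calendar entries and reminders can be generated from the data instead of being retyped from the PDF.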

Extraction Capabilities

- Award amounts and budget period dates

- Reporting deadlines and submission requirements

- Payment schedules and drawdown procedures

- Match and cost-share obligations

- Indirect cost rates and restrictions

- Prior approval requirements

- Budget category allocations

- Audit and compliance provisions

Automation Features

- Automatic calendar entry creation for all deadlines

- Email alerts at configurable intervals before due dates

- Database population with extracted compliance details

- Compliance checklist generation for grant lifecycle

- Budget tracking setup based on award terms

- Document version control and change tracking

- Team notification workflows for compliance activities
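As a rough illustration of email alerts at configurable intervals, the sketch below computes reminder dates for a single deadline. The function name `reminder_dates` and the 30/14/3-day intervals are assumptions chosen for the example; real platforms expose their own settings.

```python
from datetime import date, timedelta


def reminder_dates(due: date, days_before: list[int], today: date) -> list[date]:
    """Return reminder dates for one deadline, earliest first.

    Reminders that would already be in the past relative to `today`
    are skipped, and duplicate intervals are ignored.
    """
    candidates = sorted(due - timedelta(days=n) for n in set(days_before))
    return [d for d in candidates if d >= today]


# Assumed alert intervals: 30, 14, and 3 days before the due date.
upcoming = reminder_dates(date(2025, 4, 30), [30, 14, 3], today=date(2025, 4, 10))
for reminder in upcoming:
    print("Send reminder on", reminder.isoformat())
```

In this example the 30-day reminder falls before `today`, so only the 14-day and 3-day reminders are scheduled.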

The technical approach varies by platform. Some tools use proprietary AI models trained specifically on grant documents. Others leverage general-purpose large language models fine-tuned for grant compliance extraction. The best implementations combine both approaches, using specialized models for structured data extraction and general language models for interpreting complex or unusual provisions that don't fit standard patterns.

Comparing Award Review Platforms

The grant management software landscape includes several platforms with award review capabilities, each taking a somewhat different approach to the challenge. Understanding these differences helps nonprofits select tools that match their specific needs, grant portfolio complexity, and integration requirements.

Instrumentl positions itself as an all-in-one grant discovery, writing, and management platform. Its Award Review Assistant is designed to extract compliance details from federal, state, and foundation awards. The tool automatically identifies key terms and populates Instrumentl's grant management system, which then provides automated deadline reminders and compliance tracking throughout the grant lifecycle. Instrumentl works well for nonprofits seeking an integrated solution that handles the full grant cycle from prospecting through closeout.

Grantable focuses primarily on proposal writing and narrative generation, but includes document analysis capabilities that help extract requirements from RFPs and grant guidelines. While not specifically designed for post-award compliance tracking, Grantable excels at analyzing application requirements during the pre-award phase. Organizations using Grantable typically pair it with separate grant management software for post-award tracking.

Submittable offers grant management features with AI-enhanced capabilities for automating administrative tasks. Its strength lies in workflow automation and team collaboration. Submittable's AI features include automated data verification against third-party databases like IRS records and smart application summaries, though its award review capabilities are less specialized than Instrumentl's dedicated extraction tools.

Newer entrants to the market include platforms like CommunityForce, which emphasizes compliance monitoring with AI-powered systems that automatically track deadlines and reporting requirements. These tools often integrate with existing accounting and database systems, allowing nonprofits to add award review capabilities without replacing their entire grant management infrastructure.

Evaluation Criteria for Award Review Tools

Key factors to consider when comparing platforms

- Extraction accuracy: How reliably does the tool identify all compliance requirements without false positives or missed details?

- Document type compatibility: Does it work with federal grants, foundation awards, corporate giving agreements, and multi-year renewals?

- Integration capabilities: Can it connect with your existing accounting system, CRM, or grant management database?

- Workflow automation: What happens after extraction? Does it create tasks, send reminders, and track completion automatically?

- Human review process: Can staff easily verify and correct AI extractions before they populate production systems?

- Reporting and audit trails: Does the system maintain documentation of what was extracted, when, and by whom for audit purposes?

- Team collaboration features: How does the tool support multiple staff members working with the same grant portfolio?

- Cost structure: Is pricing based on users, grants managed, or feature access? What's included at each tier?

For nonprofits managing primarily federal grants, platforms with deep understanding of 2 CFR 200 compliance requirements offer the most value. Organizations with diverse grant portfolios spanning federal, state, foundation, and corporate funders benefit from tools that can handle varied document formats and compliance frameworks. Smaller nonprofits managing fewer than 10 active grants might find that even basic extraction tools provide significant time savings, while larger organizations managing 50 or more simultaneous awards require enterprise-grade features like multi-user workflows, role-based permissions, and advanced reporting capabilities.

Implementation Considerations

Successfully implementing award review assistants requires more than simply purchasing software. Nonprofits need to prepare their grant documents, train staff on verification procedures, establish quality control processes, and integrate the new tools with existing workflows. Organizations that rush implementation without addressing these factors often end up with underutilized systems that don't deliver expected value.

Document preparation is the first critical step. Award review AI works best with clean, text-based PDF documents. Scanned images, even with OCR, produce less accurate results. Documents with complex tables, unusual formatting, or heavy redaction may require manual pre-processing. Some nonprofits maintain both the original award document and a cleaned version optimized for AI extraction, ensuring they have the official source of record while benefiting from automated processing.

Human verification remains essential, especially during the initial implementation period. AI extraction is highly accurate for standard compliance elements but can struggle with unusual provisions, contradictory language, or highly technical requirements. Best practice involves a two-step process: the AI performs initial extraction, then a knowledgeable grant manager reviews the results, correcting any errors and flagging unusual provisions for deeper analysis. Over time, as staff gain confidence in the system's accuracy for specific funder types, verification can become more selective.
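One way to organize the two-step process is to put every extraction in a review queue and show the least certain items first. The sketch below assumes the tool reports a confidence score between 0 and 1 for each extracted field, which not every product does; the `Extraction` class, the field names, and the 0.9 threshold are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Extraction:
    field: str         # hypothetical field name, e.g. "award_amount"
    value: str
    confidence: float  # assumed 0.0-1.0 score supplied by the tool


def review_queue(
    items: list[Extraction], threshold: float = 0.9
) -> list[tuple[Extraction, str]]:
    """Order extractions for human review, least confident first.

    Every item stays in the queue; the label only suggests how much
    attention the reviewer should give it.
    """
    ordered = sorted(items, key=lambda e: e.confidence)
    return [
        (e, "close review" if e.confidence < threshold else "quick check")
        for e in ordered
    ]


sample = [
    Extraction("award_amount", "$250,000", 0.98),
    Extraction("special_condition", "Reports vary by milestone", 0.55),
    Extraction("report_due", "30 days after quarter end", 0.93),
]

for item, label in review_queue(sample):
    print(f"{label:12}  {item.field}: {item.value}")
```

Nothing is auto-approved in this design. As confidence in the system grows for specific funder types, the threshold can be lowered so that fewer items are labeled for close review.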

Integration with existing systems determines whether award review assistants truly reduce workload or simply add another tool to manage. The goal is seamless data flow from document upload through extraction to your grant management database, accounting system, and team calendars. Organizations should map out this data flow during vendor evaluation, confirming that the award review tool can push extracted data to systems you already use. Manual data re-entry between systems defeats the purpose of automation.

Setting Up Your Award Review System

Steps for successful implementation

Phase 1: Preparation (2-4 weeks)

- Inventory existing grant portfolio and identify priority awards for initial processing

- Review and organize grant documents, ensuring you have clean digital copies

- Map current grant tracking processes to identify integration requirements

- Define team roles for document upload, verification, and compliance monitoring

Phase 2: Initial Setup (1-2 weeks)

- Configure platform settings, user permissions, and notification preferences

- Set up integrations with accounting systems, calendars, and grant databases

- Establish verification workflows and quality control checkpoints

- Train core team members on document upload and extraction review procedures

Phase 3: Pilot Testing (4-6 weeks)

- Process a subset of grants (5-10 awards) to test extraction accuracy

- Compare AI extractions against manual review to identify patterns and gaps

- Refine verification procedures based on actual extraction results

- Measure time savings and identify any workflow friction points

Phase 4: Full Deployment (Ongoing)

- Roll out to entire grant portfolio, processing both new awards and historical agreements

- Expand training to all team members who work with grants

- Establish regular audits to ensure extraction accuracy remains high

- Document lessons learned and refine processes as you gain experience
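The pilot step of comparing AI extractions against manual review can be kept simple. The following sketch assumes both reviews are recorded as field-to-value mappings for the same award; the field names and values are made up for illustration.

```python
def compare_extractions(
    ai: dict[str, str], manual: dict[str, str]
) -> dict[str, list[str]]:
    """Compare AI-extracted fields against a manual review of the same award."""
    return {
        # Found by the human reviewer but not by the tool.
        "missed_by_ai": sorted(k for k in manual if k not in ai),
        # Reported by the tool but absent from the manual review.
        "extra_in_ai": sorted(k for k in ai if k not in manual),
        # Found by both, but the values disagree.
        "mismatched": sorted(k for k in ai if k in manual and ai[k] != manual[k]),
        # Found by both with the same value.
        "matched": sorted(k for k in ai if k in manual and ai[k] == manual[k]),
    }


# Made-up pilot data for a single award.
ai_result = {
    "award_amount": "250000",
    "budget_period_end": "2026-06-30",
    "indirect_cost_rate": "10%",
}
manual_result = {
    "award_amount": "250000",
    "budget_period_end": "2026-09-30",
    "indirect_cost_rate": "10%",
    "match_requirement": "25%",
}

report = compare_extractions(ai_result, manual_result)
for category, fields in report.items():
    print(category, fields)
```

Tallying these categories across the 5-10 pilot awards shows which kinds of provisions the tool tends to miss, which is where verification effort should be concentrated.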

Change management deserves special attention. Grant staff who have spent years developing manual review processes may initially resist automated tools, fearing loss of control or concerned about AI accuracy. Successful implementations involve these staff members from the beginning, positioning the tool as a way to free them from tedious data entry so they can focus on higher-value compliance analysis and funder relationship management. Demonstrating time savings with pilot grants builds confidence more effectively than any vendor presentation.

Cost considerations extend beyond software subscription fees. Factor in staff time for initial setup, document preparation, ongoing verification, and system maintenance. For most organizations, these implementation costs are recovered within the first year through time savings on award review, but planning for the investment upfront prevents surprises. Organizations managing fewer than five active grants may find that manual processes remain more cost-effective than automated tools, while those juggling 15 or more awards typically see a positive return much sooner.

When Manual Review Still Matters

Award review assistants excel at extracting standard compliance elements from well-structured documents, but they have limitations that require human judgment and expertise. Understanding when to rely on AI and when to apply deeper manual analysis is critical for maintaining compliance while benefiting from automation.

Unusual or complex provisions often require human interpretation. For instance, a federal award might include a special condition that modifies standard reporting requirements based on achieving specific program milestones. The AI might extract the standard reporting schedule but miss the conditional modification. Similarly, awards with contradictory language, where one section appears to conflict with another, require experienced grant managers to identify the inconsistency and seek clarification from the funder.

High-stakes awards warrant additional manual scrutiny regardless of AI confidence levels. A multi-million dollar federal award that represents 40 percent of your organization's annual budget deserves thorough human review even if the AI extraction appears complete. The potential cost of missing a critical requirement far exceeds the time investment in careful verification.

New funder relationships present another scenario where manual review adds value beyond extraction accuracy. The first award from a new foundation or government agency provides insights into that funder's priorities, communication style, and compliance expectations that inform future relationship management. While the AI can extract the requirements, a skilled development professional reading the full document may notice language indicating flexibility on certain provisions or red flags suggesting potential challenges ahead.

Situations Requiring Enhanced Manual Review

When human expertise remains essential

- Awards over $500,000 or representing more than 20 percent of organizational budget

- First-time awards from new funders with unfamiliar compliance frameworks

- Documents with extensive special conditions or program-specific requirements

- Multi-year awards with varying terms across different budget periods

- Collaborative grants involving multiple organizational partners with different roles

- Awards with complex cost-sharing arrangements or unusual budget restrictions

- Documents that reference external compliance frameworks not included in the agreement itself

- Awards with conditional requirements based on performance metrics or external factors

- Renewals that significantly modify terms from previous award periods

The most effective approach combines AI efficiency with human expertise. Let award review assistants handle the routine work they do well: pulling dates, amounts, and standard requirements from straightforward documents. This frees your grant managers to focus their time on complex analysis, relationship management, and strategic compliance planning where human judgment creates the most value.

Establish clear criteria for when automatic extraction is sufficient and when enhanced manual review is required. Many organizations use a tiered approach based on award size, funder type, and document complexity. Small foundation grants under $50,000 with standard terms might receive AI extraction with spot-check verification. Federal awards over $250,000 get full AI extraction plus complete manual review. Multi-million dollar awards receive both AI extraction and review by multiple staff members including program leadership and legal counsel.
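A tiered policy like this is easy to write down as a small rule so that everyone applies it the same way. The sketch below encodes the three example tiers; the $2,000,000 cutoff for "multi-million dollar" awards and the cautious default for cases the examples do not cover are assumptions, and each organization would set its own thresholds.

```python
def review_tier(amount: float, funder_type: str, standard_terms: bool = True) -> str:
    """Return the review level for an award, following the example tiers.

    Every tier starts with AI extraction; the return value names the
    human review layered on top of it.
    """
    if amount >= 2_000_000:  # assumed cutoff for "multi-million dollar"
        return "multi-staff review"
    if funder_type == "federal" and amount > 250_000:
        return "complete manual review"
    if funder_type == "foundation" and amount < 50_000 and standard_terms:
        return "spot-check verification"
    # Assumption: anything the example tiers do not cover gets the cautious option.
    return "complete manual review"


print(review_tier(25_000, "foundation"))
print(review_tier(400_000, "federal"))
print(review_tier(3_000_000, "federal"))
```

Defaulting uncovered cases to complete manual review keeps the rule conservative: an award only receives lighter verification when it clearly qualifies for it.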

Measuring Success and ROI

Quantifying the value of award review assistants helps justify the investment and identify opportunities for further optimization. Nonprofits should track both time savings and quality improvements to build a complete picture of ROI.

Time savings are the most obvious metric. Before implementing automated tools, measure how long staff currently spend reviewing award documents, entering compliance details into tracking systems, and setting up monitoring workflows. After implementation, track the same activities. Many organizations report that award review time drops from 2-4 hours per grant to 30-60 minutes, with the remaining time focused on verification rather than data entry. For an organization managing 20 grants annually, this translates to roughly 20-70 hours of recovered staff time per year, or up to nearly two work weeks of additional capacity for higher-value work.
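The savings estimate can be reproduced with a few lines of arithmetic. This sketch takes the per-grant figures quoted above and computes the widest range they support: the smallest saving pairs the fastest manual review with the slowest assisted review, and the largest saving pairs the slowest manual review with the fastest assisted review. The function name is hypothetical.

```python
def hours_saved(
    grants: int,
    before_hours: tuple[float, float],
    after_hours: tuple[float, float],
) -> tuple[float, float]:
    """Return (low, high) annual hours saved across a grant portfolio.

    `before_hours` and `after_hours` are (min, max) review times per grant.
    """
    low = grants * (before_hours[0] - after_hours[1])
    high = grants * (before_hours[1] - after_hours[0])
    return low, high


# 20 grants, 2-4 hours before, 30-60 minutes (0.5-1.0 hours) after.
low, high = hours_saved(20, (2.0, 4.0), (0.5, 1.0))
print(f"Estimated savings: {low:.0f} to {high:.0f} hours per year")
# prints "Estimated savings: 20 to 70 hours per year"
```

Substituting your own baseline measurements for the quoted figures gives an estimate specific to your portfolio.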

Quality improvements matter as much as speed. Track compliance metrics before and after implementation: missed deadlines, late reports, compliance findings in audits, and funder inquiries about requirements. Even small improvements in these areas can have significant financial impact. A single missed reporting deadline that triggers payment holds can cost more than several years of software subscription fees.

Staff satisfaction provides qualitative but important feedback. Survey your grants team about their experience with manual versus automated processes. Do they feel more confident that nothing is being missed? Has the reduction in tedious data entry reduced burnout and improved job satisfaction? These benefits, while harder to quantify, contribute to staff retention and overall organizational health.

Key Performance Indicators for Award Review Tools

Metrics to track for ongoing evaluation

- Time per award review: Average staff hours from document receipt to complete tracking setup

- Extraction accuracy rate: Percentage of AI-extracted details that require no correction during verification

- Missed deadline rate: Percentage of reporting or compliance deadlines not met on time

- Compliance findings: Audit or monitoring findings related to grant administration

- Staff hours reallocated: Time formerly spent on award review now available for other work

- Cost per grant managed: Total platform costs divided by number of active grants tracked

- User adoption rate: Percentage of grant team members actively using the system for all new awards

- Integration effectiveness: Percentage of extracted data that flows automatically to downstream systems without manual re-entry
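Several of these indicators are simple ratios that can be computed from counts you already collect during verification. The sketch below shows three of them; the function names and sample numbers are illustrative assumptions rather than benchmarks.

```python
def percentage(part: float, whole: float) -> float:
    """Return part/whole as a percentage, or 0.0 when there is nothing to measure."""
    return 0.0 if whole == 0 else round(100 * part / whole, 1)


def extraction_accuracy_rate(extracted: int, corrected: int) -> float:
    """Percentage of AI-extracted details that needed no correction."""
    return percentage(extracted - corrected, extracted)


def integration_effectiveness(auto_flowed: int, extracted: int) -> float:
    """Percentage of extracted details that reached downstream systems without re-entry."""
    return percentage(auto_flowed, extracted)


def cost_per_grant(total_platform_cost: float, active_grants: int) -> float:
    """Total platform cost divided by the number of active grants tracked."""
    return 0.0 if active_grants == 0 else round(total_platform_cost / active_grants, 2)


# Made-up numbers for one review period.
print("Accuracy rate (%):", extraction_accuracy_rate(extracted=120, corrected=9))
print("Integration effectiveness (%):", integration_effectiveness(auto_flowed=102, extracted=120))
print("Cost per grant:", cost_per_grant(total_platform_cost=6000.0, active_grants=24))
```

Recording the same counts each period makes it straightforward to compare results against your own baseline over time.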

Benchmark your results against your own baseline rather than comparing to other organizations, since grant portfolio complexity varies widely. A small nonprofit managing straightforward foundation grants will see different results than a large organization administering complex federal awards. What matters is whether the tool is delivering value relative to your specific needs and investment.

Review these metrics quarterly during the first year of implementation, then semi-annually once processes stabilize. Use the data to refine your verification procedures, identify documents that need special handling, and make informed decisions about expanding or adjusting your use of award review automation. This ongoing evaluation ensures the tools continue to serve your organization's evolving grant management needs.

Getting Started With Award Review Assistants

If you're managing more than a handful of grants and spending significant staff time on award review, automated extraction tools likely make sense for your organization. The question is not whether to adopt these tools, but which platform fits your needs and how to implement it effectively.

Start by auditing your current grant portfolio. How many active awards do you manage? What types of funders (federal, state, foundation, corporate)? How complex are your typical award agreements? This assessment helps you identify platforms with the right capabilities for your portfolio mix. Organizations managing primarily federal grants need tools with deep 2 CFR 200 expertise. Those working with diverse foundation funders need flexibility to handle varied document formats and compliance frameworks.

Request demos from multiple vendors and come prepared with actual grant documents from your portfolio. Ask the vendor to demonstrate extraction on your real documents, not generic examples. This reveals how well the tool handles your specific funder types and document formats. Pay particular attention to how the verification process works, what happens when the AI is uncertain about an extraction, and how corrections flow back into the system's learning.

Consider starting with a limited pilot rather than immediately processing your entire grant portfolio. Select 5-10 diverse awards that represent different funder types and complexity levels. Process these through the award review assistant while simultaneously conducting manual review using your traditional process. Compare results to evaluate extraction accuracy, identify gaps, and refine verification procedures before expanding to your full portfolio.

Integrate award review automation into a broader strategic approach to AI adoption. These tools work best when they're part of a comprehensive grant management system rather than standalone solutions. Think about how award review connects to grant prospecting, proposal development, budget monitoring, and reporting. Platforms that handle the full grant lifecycle often provide better integration and workflow continuity than best-of-breed point solutions.

Build internal expertise rather than depending solely on vendor support. Designate a grants technology lead who becomes deeply familiar with the platform, understands its capabilities and limitations, and can train other staff members. This person serves as the bridge between your grants team and the technology, ensuring the tools are used effectively and issues are resolved quickly. Many organizations underestimate the importance of this role, leading to underutilized systems and frustrated users.

Don't overlook the value of cultivating AI champions within your grants team who can help drive adoption and identify opportunities for optimization. These champions understand both grant compliance and technology, making them invaluable for bridging the gap between automated extraction and practical grant management.

Conclusion

Award review assistants represent a significant leap forward in grant administration efficiency. By automating the tedious work of extracting compliance details from grant documents, these tools free nonprofit staff to focus on higher-value activities: building funder relationships, improving program outcomes, and ensuring mission impact. The technology has matured to the point where extraction accuracy rivals or exceeds manual review for standard compliance elements.

Yet these tools are not magic solutions that eliminate the need for grant management expertise. They're powerful assistants that handle routine tasks exceptionally well, allowing skilled grant professionals to concentrate their expertise where it matters most. The organizations seeing the greatest benefit from award review automation are those that combine AI efficiency with human judgment, using technology to amplify staff capabilities rather than replace them.

As grant compliance requirements continue to grow more complex and funder expectations for transparency increase, manual award review processes become increasingly unsustainable. Nonprofits that adopt award review assistants now are positioning themselves for long-term success, building infrastructure that scales with their grant portfolio growth while maintaining the compliance rigor funders expect.

The question for most nonprofits is not whether to automate award review, but when and how. The tools are available, proven, and increasingly affordable. Organizations managing significant grant portfolios can no longer afford the time, risk, and opportunity cost of purely manual processes. Award review assistants have moved from emerging technology to essential infrastructure for effective grant management.
